We would like to advise BEAR users of upcoming essential maintenance on the cooling systems, which will affect our services over Easter. In particular, the HPC facility BlueBEAR will be unavailable for two weeks.

Background to the maintenance

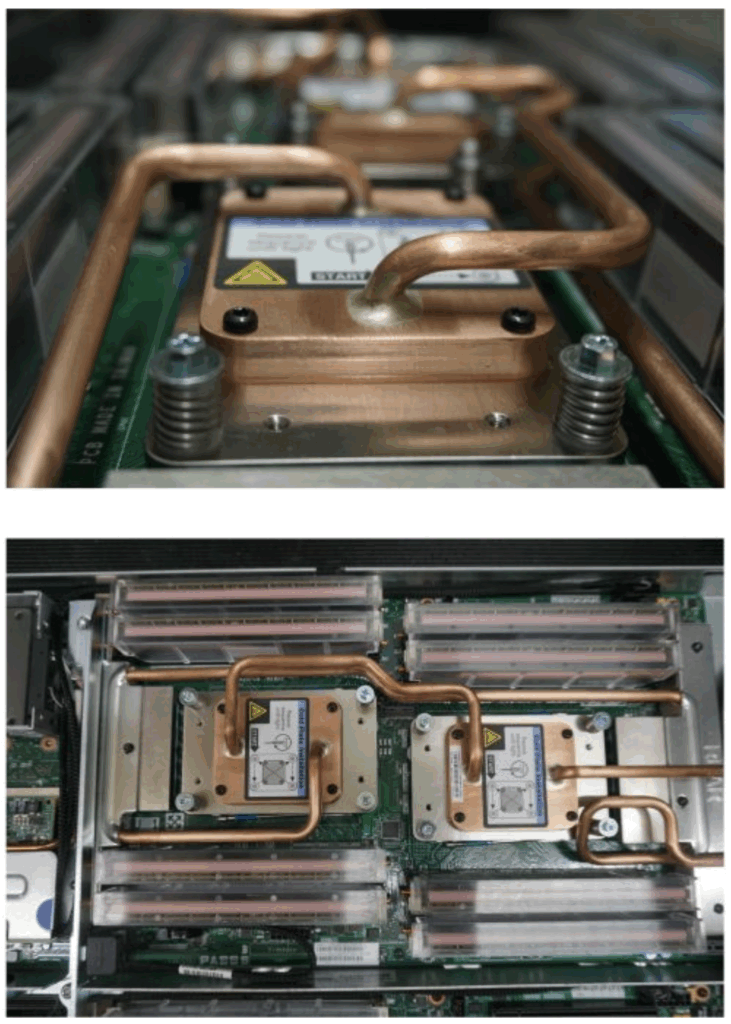

The Park Grange Data Centre houses the University’s High-Performance Computing systems, BlueBEAR and Baskerville, as well as the BEAR Research Data Storage (RDS) systems. The data centre relies on water cooling for most of its operation and has several separate water circuits. One circuit provides ambient cooling for the room (Rear Door Heat Exchanger [RDHX]) and is required by all equipment in the room. Another circuit provides direct liquid cooling (DLC) to components inside the servers; it can only be used with specifically engineered servers and is the most efficient way to cool data centre hardware. DLC is required to support the high-end CPU and GPU servers in both BlueBEAR and Baskerville, and it currently also cools the servers that run the VMs on the BEARCloud service.

When it was installed, this was one of the very first fully liquid-cooled data centres in the UK and very much a pathfinder system. After seven years of operation, both circuits are due a deep clean as part of routine maintenance. We have consulted several companies and have been advised that the only option is to drain, chemically treat, and flush the system. This process will take around two weeks to complete.

What is the impact for users?

We expect that BlueBEAR, which is cooled predominantly by DLC, will be inaccessible for the entire two-week period of water-cooling maintenance. After this, we plan to bring BlueBEAR back in stages as each part is cleaned and ready.

We currently plan for BEARCloud VMs, including BEAR-hosted websites, to remain available during the maintenance period. See the section below for details on the other DLC service, Baskerville.

Systems cooled by RDHX (i.e. RDS, and Globus for BEAR Data Transfer) should be able to stay online. We hope to achieve this by distributing these services across two data centres. Although this requires a considerable amount of work, it is a good investment, as it will make these services more resilient in future. For the duration of the planned works, we will be installing a temporary air-conditioning system with limited capacity, so we will only be able to keep certain critical services running.

When is the maintenance work likely to happen?

The work has now been scheduled for the week commencing 30 March 2026 (over the University Easter holiday). All services apart from BlueBEAR (and Baskerville) are likely to remain available, but they should be considered at risk. Exact dates for any impacts on individual services will be confirmed nearer the time via the service mailing lists.

Baskerville – end of service 30 March 2026

The Baskerville HPC-AI service will come to the end of its agreed five-year service life on Monday 30 March 2026, when the system will be turned off for the last time. Users should move all their data off Baskerville before 30 March.

UKRI will fund two new national HPC facilities (one GPU-based and one CPU-based). These facilities, known as National Compute Resources, will come online during the 2026/27 funding cycle.