On the 14th of September, 2021, we were excited to welcome visitors to the University from across the country to the official launch of our Baskerville Tier 2 HPC system, expected to rank in the Top 200 of HPC systems in the world.

Invited attendees included our technology partners in the project (OCF, Lenovo, NVIDIA and Intel), representatives from the Partner Institutes (Diamond Light Source, the Rosalind Franklin Institute and the Alan Turing Institute) and our funder, EPSRC. We were also joined by some of our pilot and Access to HPC researchers on the system, who described how Baskerville was enabling their research.

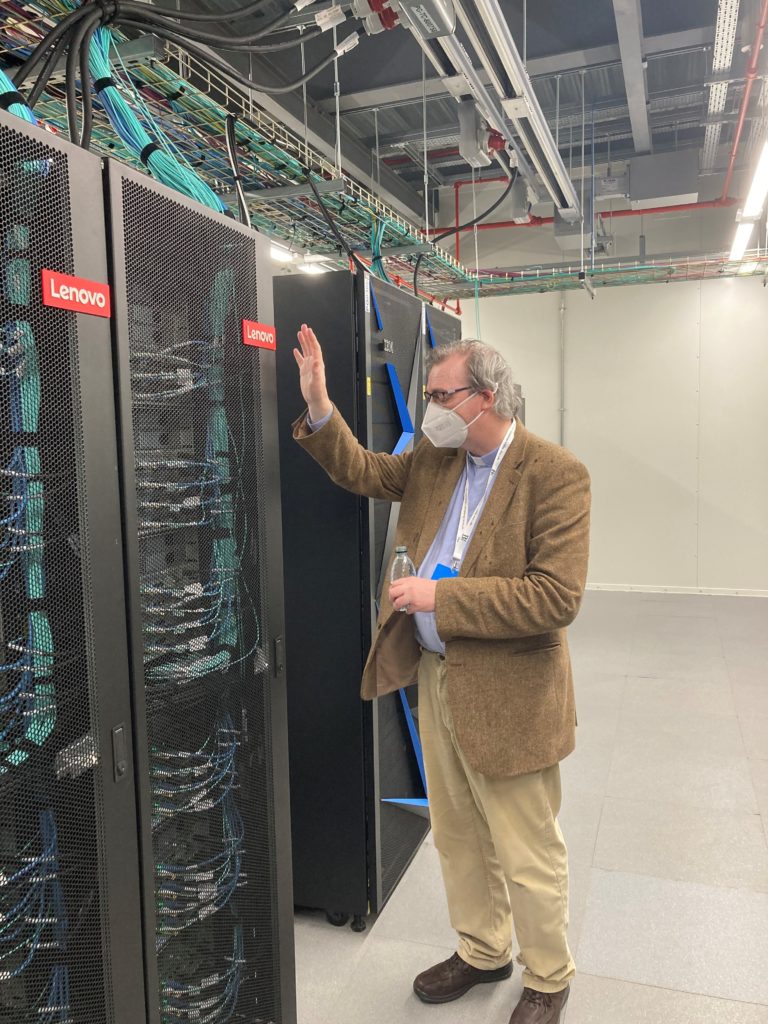

In total, we had 34 attendees, a very respectable number given the uncertainty over travelling and in-person events during the pandemic and the need to maintain social distancing in the venue. We were also fortunate to have Reverend Dr Jeremy Yates from UCL (left) join us to give his blessing to Baskerville and officially launch it into service.

After a welcome from Lead Architect Simon Thompson (Advanced Research Computing), Professor Iain Styles (Computer Science and Lead Academic) gave us the story of how Baskerville came to be built. He described the long history of research computing investments at the University of Birmingham and then discussed the three current needs behind Baskerville: 1) the diversification of scientific computing to include AI, 2) huge data volumes and 3) mixed/hybrid workloads, which combine classical modelling with analytics.

Billy McGregor (EPSRC/UKRI) followed on with the background behind funding for Baskerville through the Tier 2 HPC and World Class Labs initiatives, and discussed future funding opportunities in the Tier 2 HPC area. It was then back to Simon Thompson to describe the Baskerville technology: we are the first site in the UK to install the NVIDIA HGX A100 board with quad A100 GPUs coupled to Intel’s latest CPU, Ice Lake. Baskerville contains 184 GPUs in total, 5 PB of spinning disk storage and 0.5 PB of flash storage.

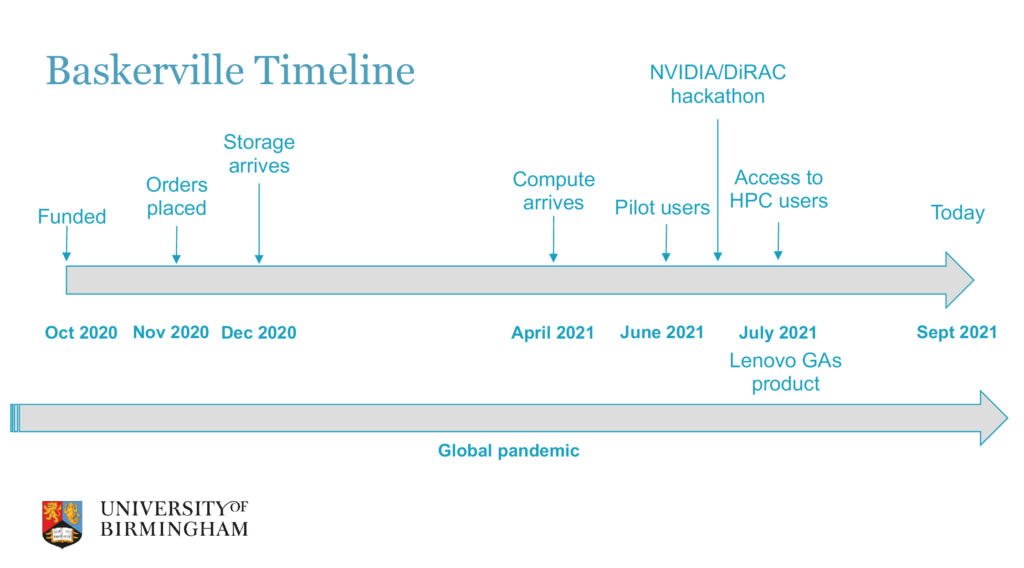

The team turned Baskerville round in record time, going from being funded in October 2020 to having pilot users on the system within 9 months (see timeline below). The early ship compute systems only arrived in April 2021 and were operational by June 2021 for pilot users, all whilst in the middle of the pandemic with limited people on site to install the system. The EasyBuild system provides automated tooling to build software (see Baskerville apps), dramatically reducing the time spent and leveraging the investments made in automated tooling for the University’s own BlueBEAR system. Monitoring of over 10,000 data points across the BEAR systems means that the systems team is alerted quickly about problems, whether at home or on site. Automated testing also speeds deployment by identifying any faults early in the bring-up process.

Kate Steele (HPC Client Executive, Lenovo) talked us through the challenges involved in delivering an early ship system. Installing such dense compute was only possible because the University of Birmingham’s research data centre uses Lenovo’s Neptune “direct to node warm water” cooling (another first for the UK): each of the GPUs consumes 400 W, with the potential power draw of one rack being 76 kW. Kate outlined the strategic partnership between Birmingham and Lenovo and how the two organisations work together to deliver cutting-edge technology to our research community.
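For readers who like to check the numbers, the figures quoted in the talks can be combined in a quick back-of-the-envelope calculation. The Python sketch below is illustrative only: it assumes each GPU node holds exactly four A100s (our reading of "quad A100"), that every GPU draws the full 400 W at once, and that the system occupies two racks at the quoted 76 kW each. The function and constant names are hypothetical and not part of any Baskerville tooling.

```python
# Back-of-the-envelope figures taken from the talks; everything else is an assumption.
TOTAL_GPUS = 184          # GPUs in Baskerville (from the talk)
GPUS_PER_NODE = 4         # assumption: one quad-A100 board per node
WATTS_PER_GPU = 400       # per-GPU power draw (from the talk)
RACK_POWER_KW = 76        # potential power draw of one rack (from the talk)
RACKS = 2                 # assumption: the two racks mentioned in the post


def gpu_node_count(total_gpus: int, gpus_per_node: int) -> int:
    """Return the number of GPU nodes, insisting the GPUs divide evenly."""
    nodes, remainder = divmod(total_gpus, gpus_per_node)
    if remainder:
        raise ValueError("GPUs do not divide evenly across nodes")
    return nodes


def gpu_power_kw(total_gpus: int, watts_per_gpu: int) -> float:
    """Return the combined GPU power draw in kilowatts (GPUs only)."""
    return total_gpus * watts_per_gpu / 1000


nodes = gpu_node_count(TOTAL_GPUS, GPUS_PER_NODE)      # 46 nodes
total_kw = gpu_power_kw(TOTAL_GPUS, WATTS_PER_GPU)     # 73.6 kW
gpu_share = total_kw / (RACKS * RACK_POWER_KW)         # fraction of two racks' draw

print(f"GPU nodes: {nodes}")
print(f"GPU power at full load: {total_kw:.1f} kW")
print(f"Share of two racks' potential draw: {gpu_share:.0%}")
```

Under these assumptions the GPUs alone account for roughly half of the potential draw of two racks, with the remainder left for CPUs, memory, networking and other components. The real distribution of nodes across racks may differ.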

The efficient cooling system means a potential Top 200 HPC system can fit into just two racks.

We then heard from the pilot users of Baskerville about how it is enabling their research. First, Dr Hector Basevi (Intelligent Robotics Lab, School of Computer Science, UoB) described how he is using Baskerville to improve image-based deep learning for determining physical stability and talked us through the required technique of predicting unseen views of a space – a number of the BEAR team were excited as we can see “CSI” becoming a reality, where unseen camera angles from CCTV footage can be predicted. The calculations are extremely memory-intensive and training of the neural network takes a long time with input from 16 cameras. So far, Hector has found Baskerville to be fast, reliable and a pleasure to use – but more storage would be beneficial in the future!

Professor Matthew Foulkes (Imperial College, London), one of our EPSRC Access to HPC researchers, joined us remotely to describe his use of Baskerville in solving the many-electron Schrödinger equation using deep neural networks. Matthew doesn’t require a training dataset for his research as the learning is done via equations, using the Monte Carlo method (read more about Matthew’s research here). Without Baskerville, Matthew’s research in this area would not be possible, as he needs massive amounts of storage and memory to increase the number of electron wave functions studied. The Access to HPC programme and Baskerville are enabling Matthew to be at the forefront of this research topic!

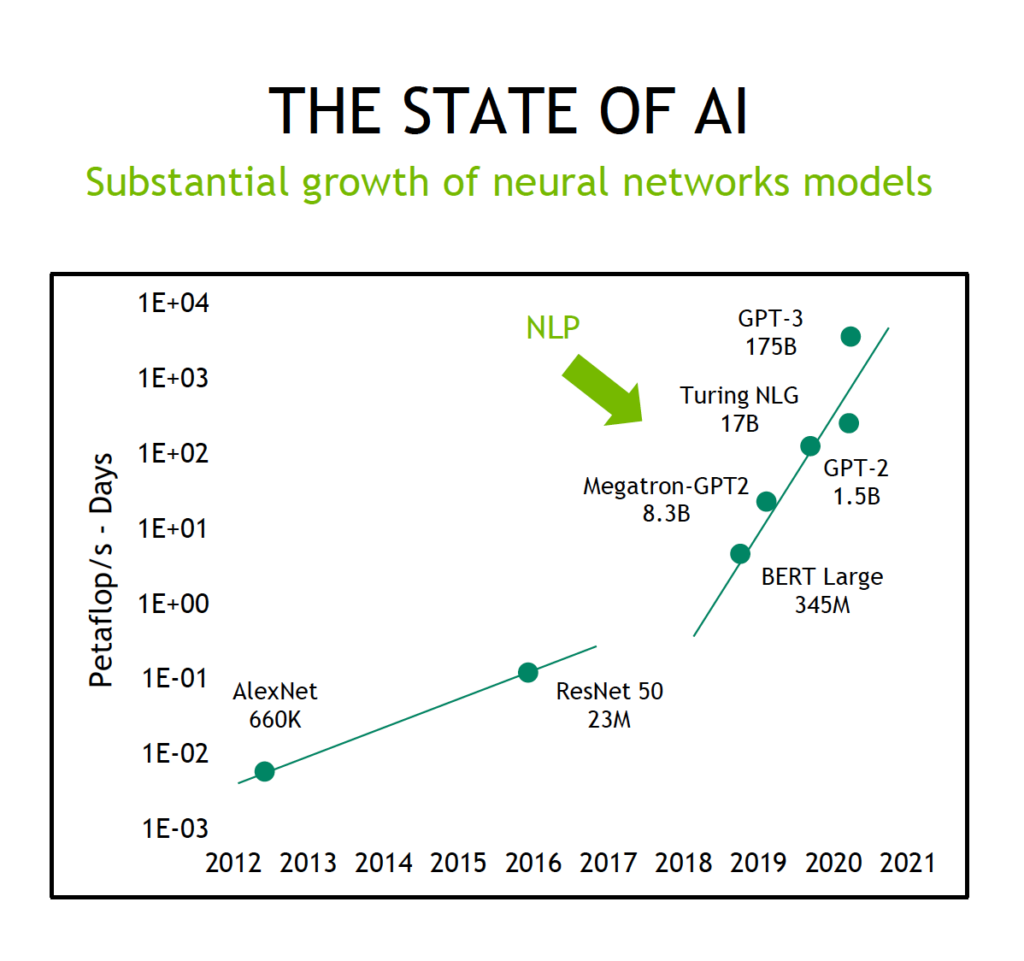

The last speaker of the day was Adam Grzywaczewski (NVIDIA), who discussed the dawn of self-supervised learning and its impact on AI. There has been a massive increase in the use and size of AI models, particularly in the last 18 months, and improving their output would require an order of magnitude more training data. Using supervised deep learning, it is estimated we would need 1,500 workers labelling up to 1 million images per month to say ‘this is a pavement’! Whilst a pavement is recognisable to many people, specialist disciplines such as radiography just don’t have sufficient specialists to provide labelled data sets for training. A supervised model is only as good as the training data that is entered into it, whereas a self-supervised model will adapt better to new problems. By using unlabelled data, which is easier to obtain, the dataset size can be increased, and that will have a bigger impact than how the neural network is designed. Traditionally, unsupervised learning has needed huge amounts of computational power and it is only recently, with systems such as Baskerville, that this has become reliable. You can read more about AI research by NVIDIA and Facebook. Adam finished up with the challenges of large-scale training, which requires knowledge of systems, software and neural networks; training from NVIDIA will be arranged for users on Baskerville in the future.

It was an excellent set of talks highlighting the need for the Baskerville Tier 2 system and the huge potential areas of research it opens up for researchers from the Partner Institutes. You can find out more about the Baskerville system at https://www.baskerville.ac.uk/, including details on how University of Birmingham researchers can access it.

We’d like to thank Intel, Lenovo, NVIDIA and OCF for supporting the event and for their commitment to the successful delivery of Baskerville.