In September’s case study, we hear from Daniel Fentham, a PhD student in Computer Science, who has been making use of BEAR’s storage and data processing power, in particular using GPUs to accelerate his research.

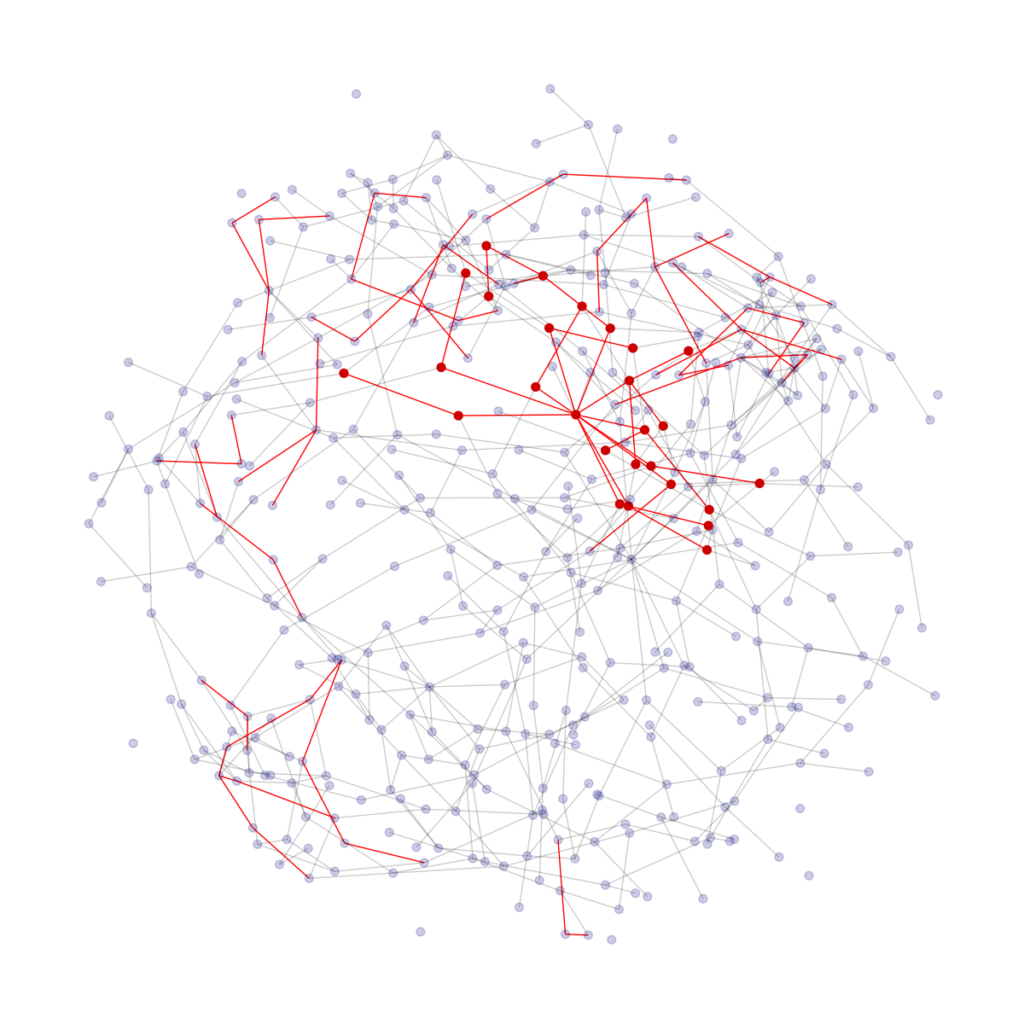

The project I’m currently working on is automated malware detection using Graph Neural Networks, for which we’ve needed to create an entire pipeline. As an overview, we start by downloading a dataset of over 100,000 Android applications to train our model. All of these apps must then undergo pre-processing to convert them into a form that a Graph Neural Network can read, and then we need to train our model. Each stage is massively computationally intensive, and in different ways: the app pre-processing stage is CPU-bound, while model training is GPU-bound.
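The case study does not say how each app is represented as a graph. As a hedged illustration only, assuming the decompiler yields a call graph (here a hypothetical dictionary mapping each method to the methods it calls), the sketch below converts it into a node list, a 2 × E edge index in coordinate (COO) format, and simple in/out-degree node features, which is one layout many GNN libraries accept. The method names and the choice of degree features are invented for the example; a real malware-detection pipeline would likely use richer node features.

```python
from typing import Dict, List, Tuple

# Hypothetical call graph from one decompiled app:
# each key is a method, each value lists the methods it calls.
CallGraph = Dict[str, List[str]]


def to_coo(graph: CallGraph) -> Tuple[List[str], List[List[int]]]:
    """Return (nodes, edge_index), where edge_index is a 2 x E list of
    [source indices, target indices] in COO format."""
    nodes: List[str] = []
    index: Dict[str, int] = {}
    # Give every method (caller or callee) a stable integer id,
    # in order of first appearance.
    for caller, callees in graph.items():
        for name in [caller, *callees]:
            if name not in index:
                index[name] = len(nodes)
                nodes.append(name)

    sources: List[int] = []
    targets: List[int] = []
    for caller, callees in graph.items():
        for callee in callees:
            sources.append(index[caller])
            targets.append(index[callee])
    return nodes, [sources, targets]


def degree_features(num_nodes: int, edge_index: List[List[int]]) -> List[List[float]]:
    """Illustrative node features: [in-degree, out-degree] for each node."""
    in_deg = [0] * num_nodes
    out_deg = [0] * num_nodes
    for src, dst in zip(edge_index[0], edge_index[1]):
        out_deg[src] += 1
        in_deg[dst] += 1
    return [[float(i), float(o)] for i, o in zip(in_deg, out_deg)]


if __name__ == "__main__":
    graph = {
        "MainActivity.onCreate": ["Network.send", "Crypto.encrypt"],
        "Crypto.encrypt": ["Network.send"],
    }
    nodes, edge_index = to_coo(graph)
    features = degree_features(len(nodes), edge_index)
    print(nodes)       # ['MainActivity.onCreate', 'Network.send', 'Crypto.encrypt']
    print(edge_index)  # [[0, 0, 2], [1, 2, 1]]
    print(features)    # [[0.0, 2.0], [2.0, 0.0], [1.0, 1.0]]
```

Keeping the graph as integer edge lists plus a per-node feature matrix means each app’s output can be saved independently during the CPU-bound stage and loaded in bulk later for GPU training.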

“The scale of the data we’re working on means that any kind of consumer compute is out of the question”

Why was BEAR chosen?

The University’s supercomputer, BlueBEAR, was chosen because of the varied demands of our system. We need both a scalable CPU compute solution that lets us perform computations in a massively parallel way and GPU compute that can train our models in a reasonable amount of time. Cloud compute resources of similar quality would be far too expensive for the project, and having an all-in-one solution that can easily switch between intensive CPU and GPU jobs is really useful.

How did it solve the issues?

BlueBEAR has solved our problems by giving us access to a flexible system with powerful CPU and GPU compute capabilities. Because our data requires a large amount of intensive pre-processing, the ability to parallelise across many nodes is incredibly useful and has saved us a lot of time!
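Because every app is pre-processed independently, the work splits cleanly across separate jobs. A minimal sketch, assuming the jobs are submitted as a Slurm job array (the case study does not say how Daniel’s jobs were actually organised): each array task reads the `SLURM_ARRAY_TASK_ID`, `SLURM_ARRAY_TASK_MIN` and `SLURM_ARRAY_TASK_COUNT` environment variables that Slurm sets for array tasks and takes its own strided share of the app list. The app file names and the `preprocess` function are hypothetical placeholders for the real decompile-and-convert step.

```python
import os
from typing import List, Sequence


def shard(items: Sequence[str], task_index: int, num_tasks: int) -> List[str]:
    """Return the items that task `task_index` (0-based) should handle
    when the work is split across `num_tasks` independent jobs."""
    if num_tasks < 1 or not 0 <= task_index < num_tasks:
        raise ValueError("need num_tasks >= 1 and 0 <= task_index < num_tasks")
    # Strided split: task k takes items k, k + num_tasks, k + 2 * num_tasks, ...
    return list(items[task_index::num_tasks])


def preprocess(apk: str) -> None:
    """Hypothetical placeholder for decompiling one app and building its graph."""
    print(f"pre-processing {apk}")


def main() -> None:
    # Slurm sets these variables for each task of a job array; the defaults
    # let the script also run outside Slurm as a single task.
    task_id = int(os.environ.get("SLURM_ARRAY_TASK_ID", "0"))
    first_id = int(os.environ.get("SLURM_ARRAY_TASK_MIN", "0"))
    num_tasks = int(os.environ.get("SLURM_ARRAY_TASK_COUNT", "1"))

    # Stand-in for the real list of APK files.
    apps = [f"app_{i:06d}.apk" for i in range(5)]
    # Assumes a contiguous array with step 1 (e.g. --array=0-3999).
    for apk in shard(apps, task_id - first_id, num_tasks):
        preprocess(apk)


if __name__ == "__main__":
    main()
```

With as many array tasks as apps, each task handles exactly one app; with fewer tasks, each one loops over its share, which reduces per-job scheduling overhead at the cost of coarser load balancing.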

Measurable results of the service

The service has essentially made our project feasible by cutting down the time it takes to pre-process our dataset. Our dataset consists of 130,000 apps, and each one must be decompiled and converted into a form that a Graph Neural Network can read. Since the same process is applied to every app, this is a perfect situation for parallelisation! BlueBEAR allows a user to run 4,000 concurrent jobs, and we take full advantage of this: by leveraging all of the compute nodes available to us, we can pre-process 4,000 apps at once and immediately start the next batch once they are complete. Our entire dataset can be processed in approximately 18 and a half hours. As a back-of-the-envelope calculation, without parallelisation (which would likely be the case on a standard computer), processing the apps one after another would take roughly 4,000 times as long: around 74,000 hours, or more than eight years!
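The figures in this paragraph can be checked with a quick calculation. This is a rough sketch that assumes every app takes about the same time to pre-process and that all 4,000 job slots stay busy throughout; real jobs vary in length, so treat the result as an order-of-magnitude estimate. Under those assumptions the serial time is simply the parallel wall-clock time multiplied by the number of concurrent jobs.

```python
from typing import Dict


def estimate(num_apps: int, max_concurrent: int, parallel_hours: float) -> Dict[str, float]:
    """Back-of-the-envelope figures, assuming equal per-app run times and
    that all concurrent job slots are kept busy."""
    waves = num_apps / max_concurrent        # apps handled per job slot, on average
    hours_per_app = parallel_hours / waves   # implied time for one app
    serial_hours = hours_per_app * num_apps  # one app after another
    return {
        "waves": waves,
        "minutes_per_app": hours_per_app * 60,
        "serial_hours": serial_hours,
        "serial_years": serial_hours / (24 * 365),
    }


if __name__ == "__main__":
    # Figures quoted in the case study.
    result = estimate(num_apps=130_000, max_concurrent=4_000, parallel_hours=18.5)
    for key, value in result.items():
        print(f"{key}: {value:,.1f}")
```

With the quoted figures this gives 32.5 waves, about 34 minutes per app, and roughly 74,000 serial hours (about 8.4 years).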

We were so pleased to hear how Dan has been able to make use of what is on offer from Advanced Research Computing, and in particular how he has made use of the GPUs on BlueBEAR. If you have any examples of how it has helped your research, then do get in contact with us at bearinfo@contacts.bham.ac.uk. We are always looking for good examples of the use of High Performance Computing to nominate for the HPCwire Awards; see our recent winners for more details.