Last week we attended the University’s Sustainability Town Hall where we could be found on the IT Services stand, discussing our response to the challenge of delivering sustainable supercomputing. On our agenda for more than a decade, the major breakthrough for BEAR came with the adoption of direct liquid cooling in 2015. Read on to find out more from Carol Sandys, Head of Advanced Research Computing…

Our services are used by a large number of researchers, from across the University

While our storage is used by over 4,000 researchers with around 3.5PB of active data, BEAR also provides ‘supercomputing’ capacity to more than 2,500 researchers. Prior to the widespread uptake of this central service, it was common for researchers to run their own servers, many at very low levels of utilisation with much of the collective capacity idle.

There is no question that powerful computing has environmental costs; materials are mined or made, equipment is manufactured and materials, kit and product have to be packaged and shipped; often across the globe. And then there are the supporting operations of the companies involved at all stages. Supercomputers need to be accommodated in purpose designed spaces and live in controlled environments. Once installed and commissioned, they are made ready for service; hopefully, to do something useful. In our case that is enabling the University’s research.

And there’s the first topic in the sustainability equation. The resources we deploy must be used for worthwhile purposes and that’s down to the research that is carried out, the outputs, its impact and re-usability. We see a huge range of projects and constantly marvel at the goals of our academic community – for examples of critical research using our HPC see genomic sequencing of >2 million Covid-19 samples and tracking air contamination in operating theatres.

Projects that will consume large resources have to pay for what they consume. To do that they necessarily have to make the case to their funder or justify the requirement to our new Strategic Resource Allocation Group (a sub-committee of RCMC).

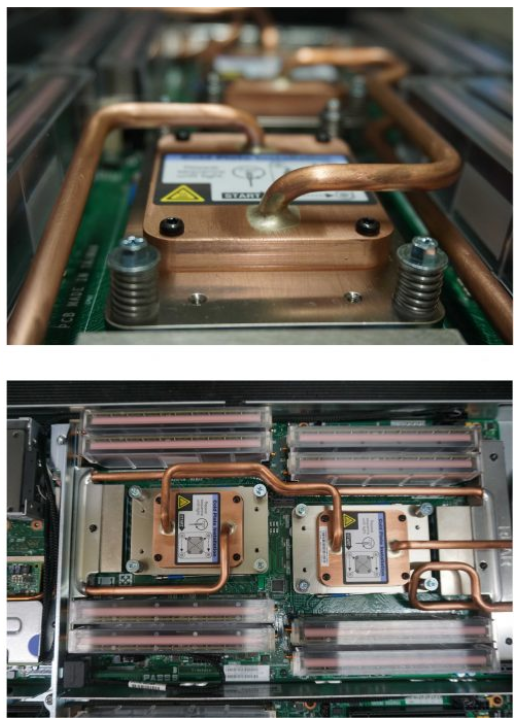

We run an efficient data centre with direct to node water cooling, eliminating the need for air conditioning

In 2018, the University opened a data centre, designed for intensive supercomputing operations and engineered for efficiency, which also meant not over-engineering the solution. The design means an acceptance of some risk (we might have to cap capacity during extended periods of >35C to reduce the cooling load). We could have built something bigger, used more materials, added more redundancy, added bigger pumps etc. but we decided against it on the grounds of keeping our consumption of land, power, materials and water to a sensible minimum. Estates worked with us all the way on this project.

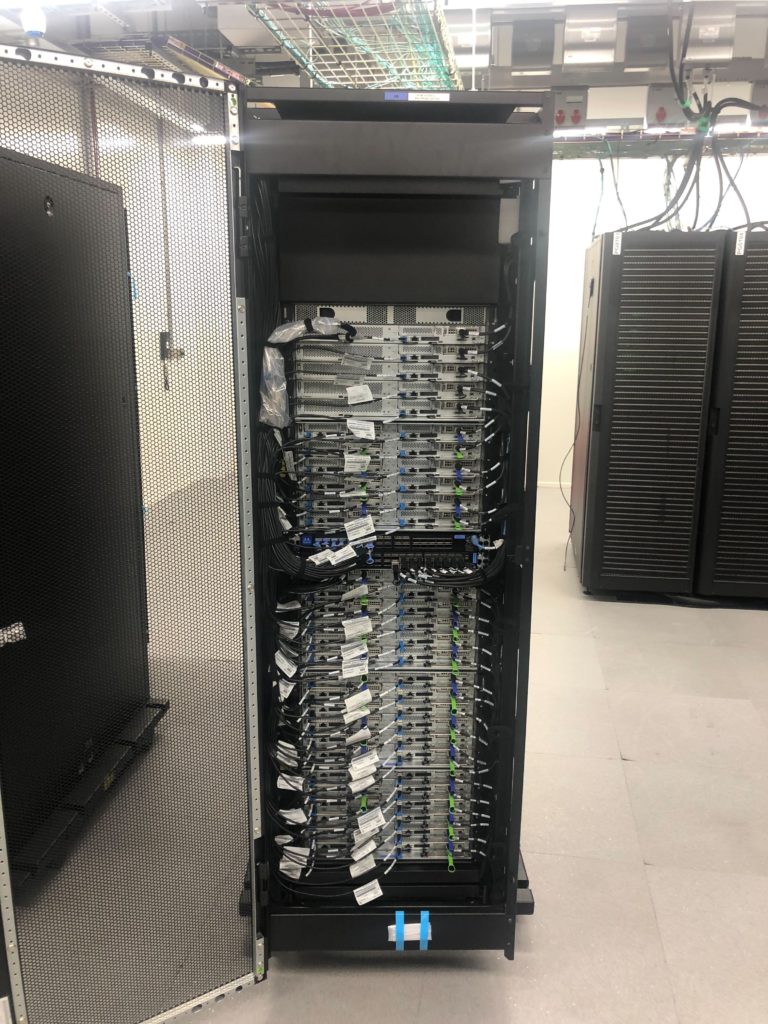

Also fundamental to the data centre design was the strategy to switch to computing kit that employs water cooling which runs through the compute units themselves… It’s plumbing Jim but not as you know it! We don’t attempt to keep the room cool instead, taking the heat away directly from the core of the equipment. This allows us to pack the data centre at huge density and deliver massive compute power from a small space and run the data centre at high utilisation levels; the closer it gets to full capacity, the more efficient the operation. We are doing well on this measure though do have to balance it with having the capacity to be able to be responsive and cater for new research projects. For more information on our data centre, including its green roof to encourage ecological diversity see our blog post.

We only use suppliers with good environmental credentials

In procuring the compute, storage and networking kit we deploy, for a long time now we have included a whole series of questions about the environmental credentials of our suppliers and mandated a set of criteria to ensure we are buying amongst the best kit available from an environmental impact perspective. When we first did this, we seemed to surprise our suppliers by asking these questions. Now it is the norm.

Our main suppliers, Lenovo, deliver ‘rack-shipped systems’ i.e. pre-assembled racks enabling us to save packaging. Unbelievably that adds up to 1.5 cubic metres of foam cushioning, 47kg cardboard and 80 linear metres of wood saved per rack!

See Lenovo’s video from ISC2022 for more details: https://www.youtube.com/watch?v=mo8tXC9mgo8

We reduce the use of old, inefficient kit and underused resources

We also consider the life cycle of the kit we buy and operate. This kind of technology moves incredibly fast and there is inherent obsolescence which is largely unavoidable. Older kit is radically less capable and less efficient than kit we can buy today. At a certain point it becomes unsupportable, unsuitable, insecure, expensive to operate and is simply abandoned. It also occupies scarce space in the data centre which we could be using for something far more productive. ‘Make do and mend’ sounds like a good idea but is largely a disaster for our kind of operation.

We train our users to write more efficient code and use the optimum software

Advanced Research Computing has a Research Software Group that builds applications to run on BEAR. This is another vital strand in the optimisation of efficiency and thus sustainability. The software chosen or code written will have a major impact on the amount of compute that needs to be applied to achieve results. Badly written code can be hugely unproductive and wasteful. Similarly, understanding how to target the right resources for the work makes a big difference. We offer training to help with this and are always available to advise.

Running an intelligent scheduling system that packs workloads on the system 24/7

HPC (High Performance Computing) is recognised as the most efficient way to do processing which is why EPSRC continues to fund national and regional facilities like Baskerville (the new accelerated compute facility, launched in 2021, and both designed and built by Advanced Research Computing). It relies on being able to pack workloads onto the system and so run at consistently high utilization levels 24*7. This is achieved by using an intelligent scheduling system. Some find this frustrating as their work doesn’t start instantly but in many many cases, the work will still complete much faster using BlueBEAR our powerful HPC service than a dedicated local set up. Institutionally it is an incredibly efficient resource and one we encourage any researcher with significant analysis, modelling, simulation, visualisation requirement to use. It eats up the work and is supported by an expert team of infrastructure and research software engineers.

So what about commercial cloud?

Perhaps I should start by saying that we can’t offshore/outsource the environmental impacts of our processing. Some commercial cloud operations are efficient and have access to advantages like operating in much cooler zones but many will not be more efficient than BEAR. You may be able to get your processing to start instantly but it will likely be at a significant cost and with limited support available unless you can pay for that too. Shipping data to the cloud can also be a challenge or a barrier; it certainly has environmental costs if it is at any scale. Institutionally and in general, given our scale, commercial cloud is not a sensible or cost-effective alternative to BEAR in the near future. We do anticipate implementing a scheme to allow BEAR to pull in top-up processing power to meet occasional and exceptional needs and take advantage of cloud spot pricing so we can do it if there are special offers that make the costs attractive too.

We provide an on-campus integrated storage and HPC system

Moving data around as in the case of shipping back and forth to the Cloud consumes power and resources and so has impact. One of the big advantages of BEAR is that it is a tightly integrated environment on campus where the data does not need to move. Data sitting in BEAR’s Research Data Store (RDS) can be processed on BlueBEAR using any of the centrally built applications, accessed from a BEAR cloud VM, from researcher’s desktops etc and is backed up routinely.

If data no longer needs to be accessed, we move it off to tape to prevent it consuming power

And then there is the data itself. There are costs, financial and environmental, for storing and backing up data. It’s justified where it is valuable or might be valuable in the future but how much useless data do we store? How do we work out which data have utility? I know this can be far from easy. We would always encourage researchers to consider archiving data from the RDS that is not in use or likely to be used in the next year or more – we now contact project owners every 6 months to make sure they still need their resources. We offer the BEAR Archive service that keeps the data on tape, which means it is no longer on a system that is burning power and using expensive enterprise class first line storage. This does not mean BEAR’s storage systems are inefficient; far from it. Like our computing infrastructure, the storage is water cooled, is built on highly efficient equipment and uses clever technologies to minimise duplication and the work it has to do.

Is there more ARC could do to make BEAR still more efficient and sustainable?

We continue to be conscious of the issues and working at it. The technology and opportunities continuously evolve. It’s been fantastic to see the innovation that has been stimulated by the sustainability questions over the last decade. We’d like to be able to seek out and re-engineer some of the software we see consuming more resource than it needs but that would need funding for more RSE time. Meanwhile we support and encourage the development of good practice for academics writing code, wrangling data and using the service.

Please join us in making BEAR the most sustainable it can be while delivering research that matters.

Update – January 2024

We held a Digital Research Conversation to discuss the topic of sustainable computing in December 2023 – find out more here: https://blog.bham.ac.uk/bear/2024/01/12/digital-research-conversations-sustainable-computing/

Update – September 2025

We’ve written an update to this blog post – see ‘How green is the BEAR? 2025 updates’.