Max Nathan is a City-REDI affiliate, and a Senior Birmingham Fellow in Regional Economic Development, based in the Business School. He is also a Deputy Director of the What Works Centre for Local Economic Growth. Here he discusses what the What Works Centre has learnt so far in the three years it has been running.

Two weeks ago we were in Manchester to discuss the Centre’s progress (the week before we held a similar session in Bristol). These sessions are an opportunity to reflect on what the Centre has been up to, but also to think more broadly about the role of evidence in policy in a post-experts world. In Bristol we asked Nick Pearce, who ran the No 10 Policy Unit under Gordon Brown, to share his thoughts. In Manchester we were lucky to be joined by Diane Coyle, who spoke alongside Henry and Andrew on the platform.

Here are my notes from the Manchester event:

Evidence-based policy is more important than ever, Diane pointed out. For cash-strapped local government, evidence helps direct resources into the most effective uses. As devolution rolls on, adopting an evidence-based approach also helps local areas build credibility with central government departments, some of whom remain sceptical about handing over power. Greater Manchester’s current devolution deal is, in part, the product of a long-term project to build an evidence base and develop new ways of working around it.

A lack of good local data exacerbates the problem, as highlighted in the Bean Review. The Review has, happily, triggered legislation currently going through the House of Commons to allow ONS better access to administrative data. Diane is hopeful that this will start to give a clearer and more timely picture of what is going on in local economies, so that the feedback can be used to influence the development of programmes in something closer to real time.

Diane also highlighted the potential of new data sources — information from the web and from social media platforms, for example — to inform city management and to help understand local economies and communities better. We think this is important too; I’ve written about this here and here.

So what have we done to help? Like all of the What Works Centres, we’ve had three big tasks since inception: to systematically review evaluation evidence, to translate those findings into usable policy lessons, and to work with local partners to embed those in everyday practice. (In our case, we’ve also had to start generating new, better evidence, through a series of local demonstrator projects.)

Good quality impact evaluations need to give us some idea about whether the policy in question had the effects we wanted (or had any negative impacts we didn’t want). In practice, we also need process evaluation — which tells us about policy rollout, management and user experience — but with limited budgets, WWCs tend to focus on impact evaluations.

In putting together our evidence reviews, we’ve developed a minimum standard for the evidence that we consider. Impact evaluations need to be able to look at outcomes before and after a policy is implemented, both for the target group and for a comparison group. That feels simple enough, but we’ve found the vast majority of local economic growth evaluations don’t meet this standard.
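To make that standard concrete, here is a minimal sketch of the before-and-after comparison it implies, often called difference-in-differences. The function name and the employment figures are made up purely for illustration; they are not taken from any of our reviews, and real evaluations involve a good deal more care than this.

```python
from statistics import mean


def diff_in_diff(target_before, target_after, comparison_before, comparison_after):
    """Change in the target group minus change in the comparison group.

    Hypothetical helper for illustration only: a simple comparison of
    group means, with no controls and no standard errors.
    """
    target_change = mean(target_after) - mean(target_before)
    comparison_change = mean(comparison_after) - mean(comparison_before)
    return target_change - comparison_change


# Made-up employment rates (%) for three areas in each group.
target_before = [60.0, 62.0, 61.0]      # areas that received the policy
target_after = [66.0, 67.0, 65.0]
comparison_before = [59.0, 61.0, 60.0]  # similar areas that did not
comparison_after = [62.0, 63.0, 61.0]

estimate = diff_in_diff(
    target_before, target_after, comparison_before, comparison_after
)
print(f"Estimated policy effect: {estimate:.1f} percentage points")
```

In this invented example, employment in the target areas rises by five points, but it also rises by two points in the comparison areas, so only three points can plausibly be attributed to the policy. An evaluation that looks only at the target group, or only at outcomes after the policy, cannot make that adjustment.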

However, we do have enough studies in play to draw conclusions about more or less effective policies.

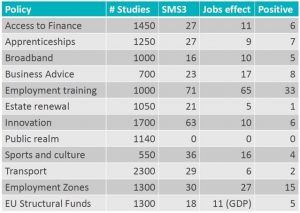

The chart above summarises the evidence for employment effects: one of the key economic success measures for LEPs and for local economies.

First, we can see straight away that success rates vary. Active labour market programmes and apprenticeships tend to be pretty effective at raising employment (and at cutting time spent unemployed). By contrast, firm-focused interventions (business advice or access to finance measures) don’t tend to work so well at raising workforce jobs.

Second, some programmes are better at meeting some objectives than others. This matters, since local economic development interventions often have multiple objectives.

For example, the firm-focused policies I mentioned earlier turn out to be much better at raising firm sales and profits than at raising workforce head count. That *might* feed through to more money flowing in the local economy — but if employment is the priority, resources might be better spent elsewhere.

We can also see that complex interventions like estate renewal don’t tend to deliver job gains. However, they work better at delivering other important objectives — not least, improved housing and local environments.

Third, some policies will work best when carefully targeted. Improving broadband access is a good example: SMEs benefit more than larger firms; so do firms with a lot of skilled workers; so do people and firms in urban areas. That gives us some clear steers about where economic development budgets need to be focused.

Fourth, it turns out that some programmes don’t have a strong economic rationale — but then, wider welfare considerations can come into play. For example, if you think of the internet as a basic social right, then we need universal access, not just targeting around economic gains.

This point also applies particularly to area-based interventions such as sports and cultural events and facilities, and to estate renewal. The evidence shows that the net employment, wage and productivity effects of these programmes tend to be very small (although house price effects may be bigger). There are many other good reasons to spend public money on these programmes, just not from the economic development budget.

Back at the event, the Q&A covered both future plans and bigger challenges. In its second phase, the Centre will be producing further policy toolkits (building on the training, business advice and transport kits already published). We’ll also be doing further capacity-building work and — we hope — further pilot projects with local partners.

At the same time, we’ll continue to push for more transparency in evaluation. BEIS is now publishing all its commissioned reports, including comments by reviewers; we’d like to see other departments follow suit.

At the Centre, we’d also like to see wider use of Randomised Control Trials in evaluation. Often this will need to involve ‘what works better’ settings where we test variations of a policy against each other, especially when the existing evidence doesn’t give strong priors. For example, Growth Hubs present an excellent opportunity to do this, at scale, across a large part of the country.

That kind of exercise is difficult for LEPs to organise on their own. So central government will still need to be the co-ordinator — despite devolution. Similarly, Whitehall has important brokering, convening and info-sharing roles, alongside the What Works Centres and others.

Incentives also need to change. We think LEPs should be rewarded not just for running successful programmes, but for running successful evaluations, whether or not the programmes themselves turn out to work.

Finally, we and other Centres need to keep pushing the importance of evidence, and to as wide a set of audiences as we can manage. Devolution, especially when new Mayors are involved, should enrich local democracy and the local public conversation. At the same time, the Brexit debate has shown widespread distrust of experts, and how ineffective much expert language and many communication tools are. The long-term goal of the Centres, to embed evidence into decision-making, has certainly got harder. But the community of potential evidence users is getting bigger all the time.

For the original piece that was posted on Medium see here.

Earlier versions of this piece were also posted on the What Works Centre blog.