Artificial Intelligence (A.I.) is tipped as a game-changer: the biggest technological phenomenon since the invention of the World Wide Web. However, experts cannot agree on how things will be different, and especially on when. The discussion on the future of A.I. is charged with excitement, but also fear. The tech entrepreneur Elon Musk, almost a demigod figure on the internet amongst younger audiences, described humanity’s current approach to A.I. as “summoning the demon”, a reference to the dangers of super-intelligence if we are not careful in our approach to autonomous, intelligent machines. Other big players sit on the other side of the debate: Mark Zuckerberg, for example, highlights the benefits of A.I. as essential to the development of the human race, which makes it difficult to be certain what a future with A.I. will look like. It seems that we are cautiously optimistic, and to understand in greater context what A.I. can offer, we must look at the timeline of A.I. so far.

The Historical Development of A.I.

A.I. as we currently define it can be traced back to the 1950s and the research of John McCarthy and Marvin Minsky, from the Massachusetts Institute of Technology. This first school of A.I. is referred to as Classical Artificial Intelligence. It tries to replicate human thinking and behaviour, for example through conversational robots that can talk with a real human. While advances have been made in language translation and game playing, this area of A.I. has fallen short of its goals. In the 1960s, a new approach emerged that sought to augment human intelligence rather than imitate it. Simply called Augmented A.I., this area has achieved more of its goals than its predecessor, but it set much less ambitious goals in the first place. It is part of the reason smartphones are so tantalising: its algorithms learn what the human brain finds pleasurable and addictive.

Fast forward to the mid-1980s, and deep learning emerged as a new technique, driven by advances in technology and building on the research of the preceding decades. Often loosely called machine learning (strictly, deep learning is a subset of machine learning), it learns by feeding collected information back in to refine its calculations for the next run. Deep learning is promising because it can tackle problems that humans find difficult, working through vast swathes of data to calibrate, optimise and ultimately evolve its own intelligence.

The most recent addition to the A.I. club is collective A.I., also known as “artificial artificial intelligence”. The central idea of this branch is that one person’s knowledge is limited to what that person has learned in their life, but assembling enough individuals in a group vastly increases the chances that the group has a rich understanding of many topics. The result is a distinctive method of analysis and reasoning, and a knowledge bank to draw on. The final product is what MIT professor Tom Malone calls “groups of individuals acting collectively in ways that seem intelligent.” One of the most well-known collective A.I. platforms is Wikipedia, which relies on thousands of volunteer editors across its topics.

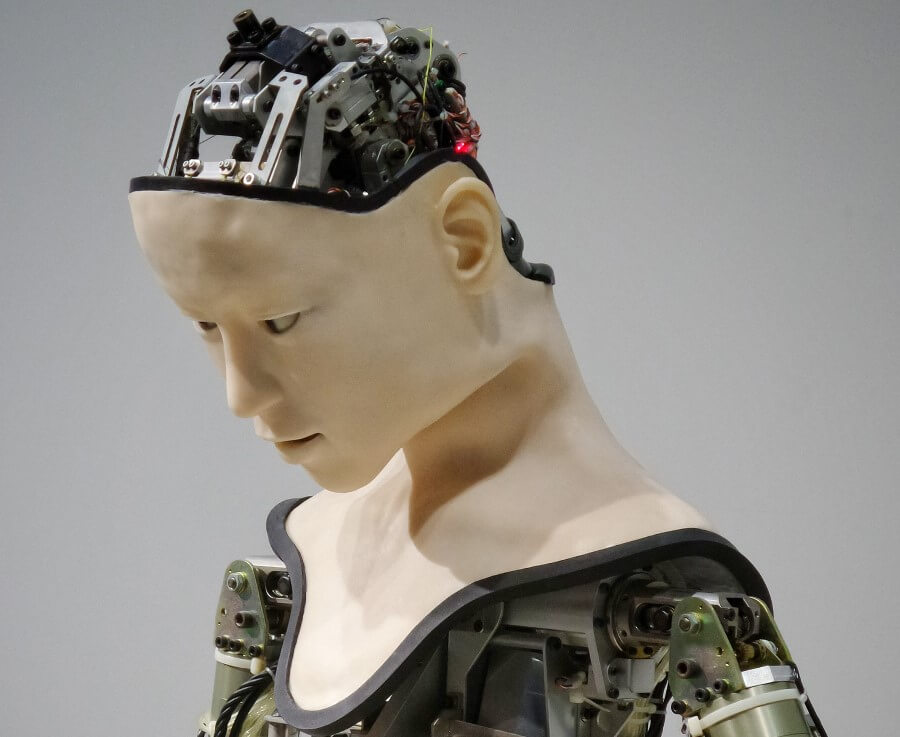

The evolution of A.I. in our increasingly computerised world has not followed the early sci-fi visions of machines becoming just like humans. The trajectory has been much more complex, as A.I. seeks to work symbiotically with humans, each playing to its own strengths. Humans can recognise complex patterns such as social cues and rely on intuition, but struggle with memory and complex repetitive tasks, which are exactly where machines excel. Further still, a combination of the major branches of A.I. could be the most fruitful in its effect on the world. Classical A.I. could simulate human intelligence in computer systems; augmented A.I. could then extend it; deep learning could use reams of data to work out the most efficient way of performing such tasks; and collective A.I. could finally combine the lessons of many individuals into conventional wisdom for the A.I.

With such an approach becoming more prevalent in society, it is very unlikely that A.I. will replace humans anytime soon, but as Professor Stephen Hawking suggested, the role of human beings is certainly expected to change. On its impact on the economy, the IEEE Computer Society said the following:

“Computer technology tends to create a few new jobs that require sophisticated skills and many jobs that require low-level skills, while it reduces mid-skill jobs”

What could this mean for economies and society? Should we expect a hollowing out of the workforce? In the next blog of this series, we’ll explore the impact of A.I. on the global economy and what countries are doing behind the scenes to ensure that they are not left behind.

Sources

IEEE Computer Society (2020), Debating Artificial Intelligence

New York Times (June 9, 2018), Mark Zuckerberg, Elon Musk and the Feud Over Killer Robots

Technology Review (March 27, 2020), A debate between AI experts shows a battle over the technology’s future

This blog was written by Josh Swan, Policy and Data Analyst, City-REDI.

Disclaimer: The views expressed in this analysis post are those of the authors and not necessarily those of City-REDI or the University of Birmingham.
