This General Election has been full of surprises. So I’ve been digging into the YouGov MRP voting model, pretty much the only one that got the 2017 Election result correct.

Given all the current humble pie and book eating by pundits who didn’t spot the result coming, this seems worth doing. I also think there are some useful takeaways for cities, especially as devolution rolls on.

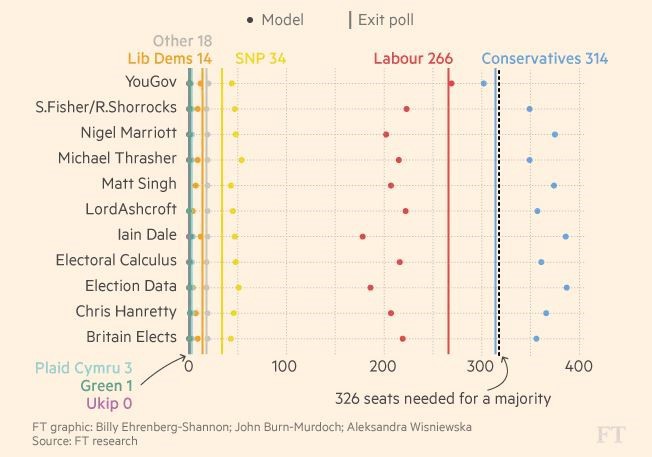

YouGov’s MRP (multilevel regression and post-stratification) method not only got the national result right, but correctly predicted results in 93% of seats. Compare this to most other polls [£, and chart below]. The model somehow also predicted the Canterbury result, where Labour won for the first time since 1918.

It turns out that earlier versions of an MRP model also spotted the Leave vote in 2016, and did pretty well in the US 2016 Presidential Election. Though this version seems to have worked better, for reasons I’ll come back to.

Remember, this is a predictive method — how might people vote in the future? — that did about as well as the main exit poll — which asked people *how they just voted*. (John Curtice has more on how UK exit polling is done here.)

So how does the MRP model work? Here’s an overview. YouGov describe this as a Big Data approach; it seems to involve bespoke data and data science methods, but also lots of public datasets, aka ‘administrative Big Data’. The key steps seem to be:

1/ YouGov have weekly individual-level data on voting intention and detailed characteristics (including past voting). They run around 50k online interviews per week, and anyone can sign up;

2/ They use this to build a typology of voter types;

3/ For each voter type, they then fit a model that predicts voting intention;

4/ For each constituency, they then estimate how these types are spread (using public resources like the British Election Study and ONS datasets, perhaps these);

5/ They work out how the vote should go in each constituency.
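
To make steps 3 to 5 concrete, here is a toy sketch in Python. It is not YouGov's model: the voter types, survey responses and constituency counts are all made up, and a simple shrinkage estimator (pulling small groups towards the overall average) stands in for the multilevel regression. The function names and the `prior_strength` parameter are my own assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical survey responses: (voter_type, 1 if they intend to vote for party A)
responses = [
    ("young_renter", 1), ("young_renter", 1), ("young_renter", 0),
    ("older_owner", 0), ("older_owner", 0), ("older_owner", 1), ("older_owner", 0),
    ("mid_age_owner", 1),
]

# Hypothetical numbers of each voter type living in each constituency
constituency_counts = {
    "Seat X": {"young_renter": 30_000, "older_owner": 20_000, "mid_age_owner": 10_000},
    "Seat Y": {"young_renter": 10_000, "older_owner": 40_000, "mid_age_owner": 10_000},
}


def pooled_type_shares(responses, prior_strength=5.0):
    """Estimate party A's share within each voter type.

    Stand-in for the multilevel regression: each type's raw share is
    shrunk towards the overall share, more strongly when the type has
    few respondents (partial pooling).
    """
    totals = defaultdict(int)
    votes = defaultdict(int)
    for voter_type, vote in responses:
        totals[voter_type] += 1
        votes[voter_type] += vote
    overall = sum(votes.values()) / sum(totals.values())
    return {
        t: (votes[t] + prior_strength * overall) / (totals[t] + prior_strength)
        for t in totals
    }


def poststratify(type_shares, counts):
    """Weight each type's estimated share by how many such voters live in the seat."""
    population = sum(counts.values())
    return sum(type_shares[t] * n for t, n in counts.items()) / population


type_shares = pooled_type_shares(responses)
seat_estimates = {
    seat: poststratify(type_shares, counts)
    for seat, counts in constituency_counts.items()
}

for seat, share in seat_estimates.items():
    print(f"{seat}: estimated party A share {share:.1%}")
```

The point of the shrinkage step is that a voter type with a single respondent (like `mid_age_owner` here) doesn't get a wild 100% estimate; it borrows strength from everyone else. A real MRP model does this far more carefully, with many characteristics and parties at once.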

By contrast, traditional polls tend to sample about 1,000 people, then project direct from respondents to the whole UK, using weights to compensate for demographics, voting intention and so on.

This helps us see why an MRP approach might work better than conventional methods:

- First, MRP has a much bigger starting sample. More observations = sharper results.

- Second, MRP is micro-to-macro: it models each constituency individually, so stands a better chance of picking up local issues (such as the hospital closure crisis which helped drive the Canterbury result).

- Third, MRP is both fine-grained and high-frequency. The only pundits to pick up on the reality of #GE2017 got out there on the ground. Given the complexity of UK politics right now, we also need methods to get at this complexity in a structured way.

- Fourth, MRP methods should get better over time. You end up with loads of high-frequency training data, and this progressively makes the model better.
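
As a rough illustration of the first point, here is the textbook margin-of-error calculation for the two sample sizes mentioned above. This is only a sketch: it assumes a simple random sample and a 50% vote share, which neither weighted polls nor online panels strictly satisfy, and the function name is my own.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a share p from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


for n in (1_000, 50_000):
    print(f"n={n}: +/- {100 * margin_of_error(n):.1f} points")
# n=1000: +/- 3.1 points
# n=50000: +/- 0.4 points
```

Bear in mind that 50k respondents spread over 650 constituencies is still only about 77 people per seat on average. That is why the modelling step, which pools information across similar voters in different places, matters as much as the raw sample size.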

This doesn’t mean that conventional polling has had its day – e.g. Survation were also on the money. But it’s notable that most conventional polls fell over this time, just as they did in the 2015 General Election.

I suspect that these four factors helped YouGov pick up higher turnout for younger voters faster than most pollsters (and many mainstream journalists), as well as shifts in other age groups. This post by Ben Lauderdale, one of their chief modellers, seems to support that. (Note that we won’t know turnout by age for sure until the next BES in a few months. If modelling can get us to a decent understanding faster, that’s very useful.)

As Sam Freedman points out, it also helps show precisely, and in close to real time, the huge damage the Conservative manifesto did to the party’s chances.

Micro-to-macro techniques like MRP could be useful for Mayoral elections and city politics. With a 50k sample, could you train the model on a city-region like the West Midlands using public data? If so, this feels much more useful and adaptable than one-off traditional polling.

YouGov say their model works at local authority level, so some version of this could probably be done now. However, I suspect that even a big national sample might be too sparse for very local analysis, say at neighbourhood level. In this latter case, you could also imagine building a richer, locally-specific model for a whole conurbation — like the West Midlands or Greater Manchester — using a big base of local respondents.

This would be expensive — but for a local university, or a group of them, it would be a super interesting (and public-spirited) long term investment.

Birmingham University’s city-regional lab City-REDI will be exploring this further in the coming months.

Thanks to Rebecca Riley for comments on an earlier draft.

For the original piece posted by @iammaxnathan, please see here.